Students (and faculty): it is time to get serious about Generative AI. According to the World Economic Forum, 70% of the skills used in most jobs will change due to AI. In colleges, the vast majority of students use AI for homework and find it more helpful than traditional tutors.

The rapid adoption and development of AI has rocked higher education and thrown into doubt many students’ career plans and as many professors’ lesson plans. The best and only response is for students to develop capabilities that can never be authentically replicated by AI because they are uniquely human. Only humans have flesh and blood bodies. And these bodies are implicated in a wide range of Uniquely Human Capacities (UHCs), such as intuition, ethics, compassion, and storytelling. Students and educators should reallocate time and resources from AI-replaceable technical skills like coding and calculating to developing UHCs and AI skills.

Adoption of AI by employers is increasing while expectations for AI-savvy job candidates are rising. College students are getting nervous: 51% are second-guessing their career choice and 39% worry that their job could be replaced by AI, according to Cengage Group’s 2024 Graduate Employability Report. Recently, I heard a student at an on-campus Literacy AI event ask an OpenAI representative if she should drop her efforts to be a web designer. (The representative’s response: spend less time learning the nuts and bolts of coding, and more time learning how to interpret and translate client goals into design plans.)

At the same time, AI capabilities are improving quickly. Recent frontier models have added “deep research” (web search and retrieval) and “reasoning” (multi-step thinking) capabilities. Both produce better, more comprehensive, accurate and thoughtful results, performing broader searches and developing responses step-by-step. Leading models are beginning to offer agentic features, which can do work for us, such as coding, independently. American AI companies are investing hundreds of billions in a race to develop Artificial General Intelligence (AGI). This is a poorly defined state of the technology where AI can perform at least as well as humans in virtually any economically valuable cognitive task. It can act autonomously, learn, plan, and adapt, and interact with the world in a general flexible way, much as humans do. Some experts suggest we may reach this point by 2030, although others have a longer timeline.

Hard skills that may be among the first to be replaced are those that AI can do better, cheaper, and faster. As a general-purpose tool, AI can already perform basic coding, data analysis, administrative, routine bookkeeping and accounting, and illustration tasks that previously required specialized tools and experience. I have had my own mind-blowing “vibe-coding” experience, creating custom apps with limited understanding of coding syntax. AIs are capable of quantitative, statistical, and textual analysis that might have required Excel or R in the past. According to Deloitte, AI initiatives are touching virtually every aspect of a company’s business, affecting IT, operations, and marketing the most. AI can create presentations driven by natural language, making manual PowerPoint drafting skills less essential.

Humans’ Future-Proof Strategy

How should students, faculty and staff respond to the breathtaking pace of change and profound uncertainties about the future of labor markets? The OpenAI representative was right: reallocate time and resources from easily automatable skills to those that only humans with bodies can perform. Let us spend less time teaching and learning skills that are likely to be automated soon.

| Technical Skills OUT | Uniquely Human Capacities IN |
| --- | --- |
| Basic coding | Mindfulness, empathy, and compassion |
| Data entry and bookkeeping | Ethical judgment, meaning making, and critical thinking |
| Routine data analysis | Intuition, storytelling, aesthetic sense, computational thinking |
| Administrative tasks | Collaboration and leadership |
| Mastery of single-purpose software (e.g., PowerPoint, Excel, accounting apps) | Authentic and ethical use of generative and other kinds of AI to augment UHCs |

Instead, students (and everyone) should focus on developing Uniquely Human Capacities (UHCs). These are abilities that only humans can authentically perform because they need a human body. For example, intuition is our inarticulable and immediate knowledge that we know somatically, in our gut. It is how we empathize, show compassion, evaluate morality, listen and speak, love, appreciate and create beauty, play, collaborate, tell stories, find inspiration and insight, engage our curiosity, and emote. It is how we engage with the deep questions of life and ask the really important questions.

According to Gholdy Muhammad in Unearthing Joy, a reduced emphasis on skills can improve equity by creating space to focus on students’ individual needs. She argues that standards and pedagogies need to also reflect “identity, intellectualism, criticality, and joy.” These four dimensions help “contextualize skills and give students ways to connect them to the real world and their lives.”

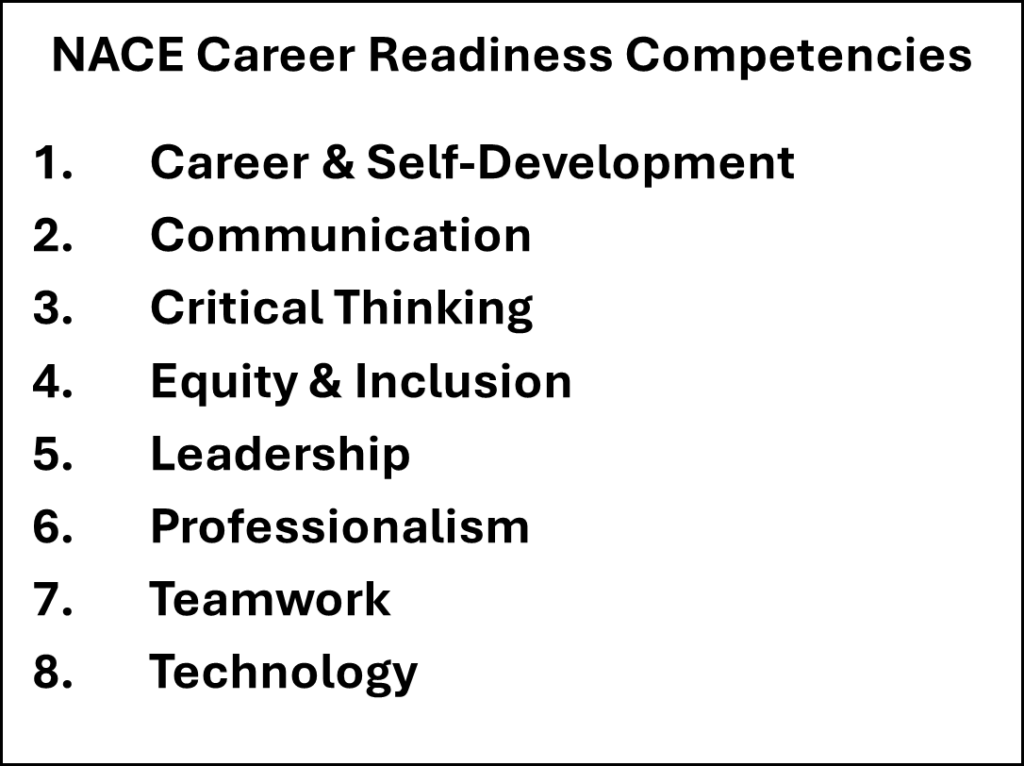

The National Association of Colleges and Employers has created a list of eight career readiness competencies that employers say are necessary for career success. Take a look at the list below and you will see that seven of the eight are UHCs. The eighth, technology, underlines the need for students and their educators to understand and use AI effectively and authentically.

For example, an entry-level finance employee who has developed their UHCs will be able to nimbly respond to changing market conditions, interpret the intentions of managers and clients, and translate these into effective analysis and creative solutions. They will use AI tools to augment their work, adding greater value with less training and oversight.

Widen Humans’ Comparative Advantage

As demonstrated in the example above, our UHCs are humans’ unfair advantage over AI. How do we develop them, ensuring the employability and self-actualization of students and all humans?

The foundation is mindfulness. Mindfulness is about being fully present with ourselves and others, and accepting our experience, primarily via bodily sensations, without judgment or preference. It allows us to accurately perceive reality, including our natural intuitive connection with other humans, a connection AI cannot share. Mindfulness can be developed during and beyond meditation, moments of stillness devoted to mindfulness. Mindfulness practice has been shown to improve self-knowledge, support career goal setting, and improve creativity.

Mindfulness supports intuitive thinking and metacognition, our ability to think clearly about thinking. Non-conceptual thinking, using our whole bodies, entails developing our intuition and a growth mindset. The latter is about recognizing that we are all works in progress, where learning is the product of careful risk-taking, learning from errors, supported by other humans.

These practices support deep, honest, authentic engagement with other humans of all types. (These are not available over social media.) For students, this is about engaging with each other in class, study groups, clubs, and elsewhere on campus, as well as engaging with faculty in class and office hours. Such engagement with humans can feel unfamiliar and awkward as we emerge from a pandemic. However, these interactions are a critical way to practice and improve our UHCs.

Literature and cinema are ways to engage with and develop empathy for and understanding of humans you do not know, who may no longer be alive or may never have existed at all. Fiction is perhaps the only way to experience in the first person what a stranger is thinking and feeling.

Indeed, every interaction with the world is an opportunity to practice those Uniquely Human Capacities (UHCs):

- Use your imagination and creativity to solve a math problem.

- Format your spreadsheet or presentation or essay so that it is beautiful.

- Get in touch with the feelings that arise when faced with a challenging task.

- Many students tell me they are in college to better support and care for family. As you do the work, let yourself experience it as an act of love for them.

AI Can Help Us Be Better Humans

AI usage can dull our UHCs or sharpen them. We should use AI to challenge ourselves to improve our work, not to provide shortcuts that make our work average, boring, or worse. Ethan Mollick (2024) describes the familiar roles AIs can profitably play in our lives. Chief among these is as a patient, always available, if sometimes unreliable tutor. A tutor will give us helpful and critical feedback and hints but never the answers. A tutor will not do our work for us. A tutor will suggest alternative strategies, and we can instruct it to nudge us to check on our emotions, physical sensations, and the moral dimensions of our work. When we prompt AI for help, we should explicitly give it the role of a tutor or editor (as I did with Claude for this article).

How do we assess whether we and our students are developing our UHCs? We can develop personal and work portfolios that tell the stories of the connections, insights, and benefits to society we have made. We can get honest testimonials from trusted human partners and engage in critical yet self-compassionate introspection and journaling. Deliberate practice with feedback, in real life and in role-playing scenarios, can also be valuable. One thing that will not work as well: traditional grades and quantitative measures. After all, humanity cannot be measured.

In a future where AI or AGI assumes the more rote and mechanical aspects of work, we humans are freed to build our UHCs, to become more fully human. An optimistic scenario!

What Could Go Wrong?

The huge, profit-seeking transnational corporations that control AI may soon feel greater pressure to show investors a return on their enormous investments. This could cause costs for users to go up, widening the capabilities gap between those with means and the rest. It could also result in Balkanized AI, where each model is embedded with political, social, and other biases that appeal to different demographics. We see this beginning with Claude, which prioritizes safety, and Grok, which is built to emphasize free expression.

In addition, AI could get good enough at faking empathy, morality, intuition, sense making, and other UHCs. In a competitive, winner-take-all economy with even less government regulation and a leakier safety net, companies may aggressively reduce hiring at the entry level and of (expensive) high performers. Many of the job functions of the former can be most easily replaced by AI. Mid-level professionals can use AI to perform at a higher level.

Finally, and this is not an exhaustive list: students and all of us may succumb to the temptation to use AI to shortcut our work, slowing or reversing development of critical thinking, analytical skills, and subject matter expertise. The tech industry has perfected, over twenty years, the science of making our devices virtually impossible to put down, so that we are “hooked.”

Keeping Humans First

The best way to reduce the risks posed by AI-driven change is to develop our students’ Uniquely Human Capacities while actively engaging policymakers and administrators to ensure a just transition. This enhances the unique value of flesh-and-blood humans in the workforce and society. Educators across disciplines should identify lower value-added activities vulnerable to automation and reorient curricula toward nurturing UHCs. This will foster not only employability but also personal growth, meaningful connection, and equity.

Even in the most challenging scenarios, we are unlikely to regret investing in our humanity. Beyond being well-employed, what could be more rewarding than becoming more fully actualized, compassionate, and connected beings? By developing our intuitions, morality, and bonds with others and the natural world, we open lifelong pathways to growth, fulfillment, and purpose. In doing so, we build lives and communities resilient to change, rich in meaning, and true to what it means to be human.

The article represents my opinions only, not necessarily those of the Borough of Manhattan Community College or CUNY.

Brett Whysel is a lecturer in finance and decision-making at the Borough of Manhattan Community College, CUNY, where he integrates mindfulness, behavioral science, generative AI, and career readiness into his teaching. He has written for Faculty Focus, Forbes, and The Decision Lab. He is also the co-founder of Decision Fish LLC, where he develops tools to support financial wellness and housing counselors. He regularly presents on mindfulness and metacognition in the classroom and is the author of the Effortless Mindfulness Toolkit, an open resource for educators published on CUNY Academic Works. Prior to teaching, he spent nearly 30 years in investment banking. He holds an M.A. in Philosophy from Columbia University and a B.S. in Managerial Economics and French from Carnegie Mellon University.

References

80,000 Hours. (2025, March 10). When do experts expect AGI to arrive? 80,000 Hours. https://80000hours.org/2025/03/when-do-experts-expect-agi-to-arrive/

Anthropic. (2025, May 22). Activating AI Safety Level 3 protections. Anthropic. https://www.anthropic.com/news/activating-asl3-protections

Artsmart. (2025, February 14). AI in education: Statistics, facts & future outlook [2025 update]. Artsmart. https://artsmart.ai/blog/ai-in-education-statistics-2025/

Business Insider. (2025, May 12). AI professor urges students to cultivate curiosity in coding and career planning. Business Insider. https://www.businessinsider.com/ai-professor-anima-anandkumar-curiosity-coding-student-advice-caltech-jobs-2025-5

Canadian Education and Research Institute for Counselling. (2020, October 13). Leveraging the power of mindfulness in career development. CERIC. https://ceric.ca/2020/10/leveraging-the-power-of-mindfulness-in-career-development/

Cengage Group. (2024, July 23). Higher education’s value shift: Cengage Group’s 2024 employability report reveals growing focus on workplace skills & GenAI’s impact on career readiness. Cengage Group. https://www.cengagegroup.com/news/press-releases/2024/cengage-group-2024-employability-report/

Cimpanu, C. (2025, March 12). AI coding assistant refuses to write code, tells user to learn programming instead. Ars Technica. https://arstechnica.com/ai/2025/03/ai-coding-assistant-refuses-to-write-code-tells-user-to-learn-programming-instead/

Cleveland Clinic. (2023, September 20). The gut-brain connection. Cleveland Clinic. https://my.clevelandclinic.org/health/body/the-gut-brain-connection

Deloitte. (2023). State of AI in the enterprise (5th ed.). Deloitte Insights. https://www.deloitte.com/us/en/what-we-do/capabilities/applied-artificial-intelligence/articles/state-of-ai-2022.html

Deloitte. (2024, Q4). State of GenAI: Q4 2024 report. Deloitte Insights. https://www2.deloitte.com/content/dam/Deloitte/us/Documents/consulting/us-state-of-gen-ai-q4.pdf

Eyal, N. (2014). Hooked: How to build habit-forming products. Portfolio/Penguin.

Klein, E. (Host). (2025, April 2). The Ezra Klein Show [Audio podcast episode]. The New York Times. https://podcasts.apple.com/us/podcast/the-ezra-klein-show/id1548604447?i=1000708298931

Menand, L. (2025, March 8). Will the humanities survive artificial intelligence? The New Yorker. https://www.newyorker.com/culture/the-weekend-essay/will-the-humanities-survive-artificial-intelligence

Mollick, E. (2024). Co-intelligence: Living and working with AI. Portfolio/Penguin Random House.

Muhammad, G. (2023). Unearthing joy: A guide to culturally and historically responsive teaching and learning. Scholastic.

National Association of Colleges and Employers. (2023). Career readiness defined. NACE. https://www.naceweb.org/career-readiness/competencies/career-readiness-defined/

OpenAI. (2023, March 14). GPT-4 technical report. arXiv. https://cdn.openai.com/papers/gpt-4.pdf

Roumeliotis, G. (2025, August 28). Musk’s xAI forays into agentic coding with new model. Reuters. https://www.reuters.com/business/musks-xai-forays-into-agentic-coding-with-new-model-2025-08-28/

Storytelling Edge. (2025, January 30). Why storytelling will be the superpower in the age of AI. Storytelling Edge (Substack). https://storytellingedge.substack.com/p/why-storytelling-will-be-the-super?utm_campaign=post&utm_medium=web&triedRedirect=true

Thompson, C. (2025, February 3). Are we taking AI seriously enough? The New Yorker. https://www.newyorker.com/culture/open-questions/are-we-taking-ai-seriously-enough

World Economic Forum. (2025, January 20). 2025: The year companies prepare to disrupt how work gets done. World Economic Forum. https://www.weforum.org/stories/2025/01/ai-2025-workplace/